Can You Have More Than One Independent Variable

Linear Regression. Back to Basics.

You have been waiting for this since Day one, haven't you? Because we like it unconventional, we saved it for the last. The next two days also cover Regression.

Linear Regression is one of the oldest, simplest and most widely used supervised machine learning algorithms for predictive analysis.

Every Data Scientist starts with this one. So, here it is.

The path to your dreams is rarely linear. You gotta zigzag your way to happiness.

What is Linear Regression?

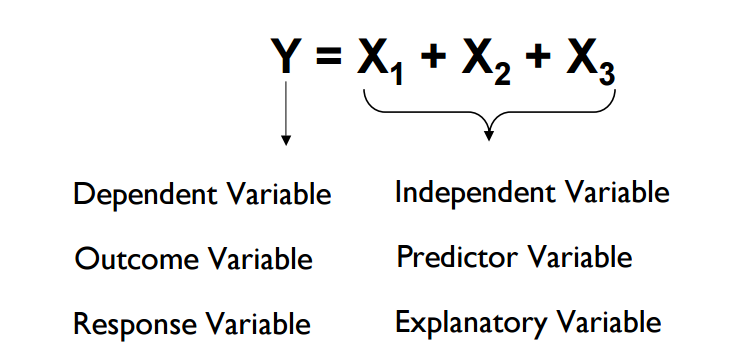

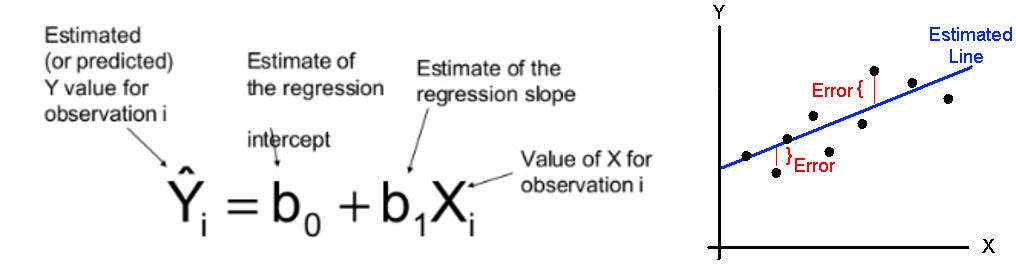

It's a method to predict a target variable by fitting the best linear relationship between the dependent and independent variables.

What is the Best Fit?

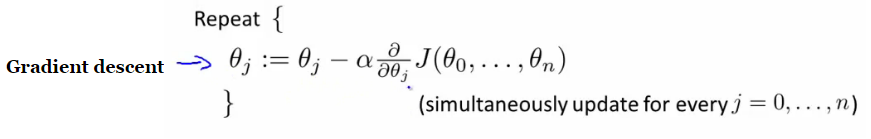

It can be of any shape depending on the number of independent variables (a point on the axis, a line in two dimensions, a plane in three dimensions, or a hyperplane in higher dimensions).

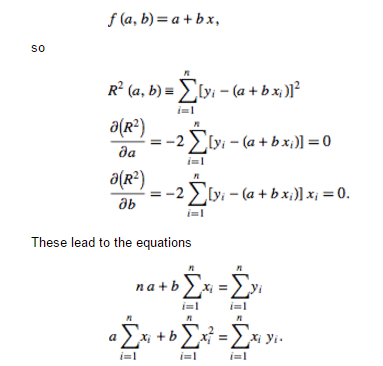

Least Squares Method: The best fit is found by making the sum of the squared distances between the shape and the actual observations as small as possible. The fit of the shape is "best" in the sense that no other position would produce less error given the choice of shape.

If you want to go into the mathematics, you can have a look below, or if you hate mathematics, just skip!

Note: I have shown this method only for Simple Linear Regression. You may extend it for Multiple Linear Regression. Don't know what Simple and Multiple Linear Regressions are? No problem, keep reading.
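For those who do want the mathematics, here is a minimal sketch of the least squares solution for Simple Linear Regression. For a line y = b0 + b1*x, minimising the sum of squared residuals gives b1 = sum((x - mean_x) * (y - mean_y)) / sum((x - mean_x)^2) and b0 = mean_y - b1 * mean_x. The function name and the toy numbers are made up for illustration.

```python
def fit_simple_linear_regression(xs, ys):
    """Return (intercept, slope) that minimise the sum of squared residuals."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # Covariance-like and variance-like sums from the closed-form solution
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = y_mean - slope * x_mean
    return intercept, slope


# Toy data lying exactly on y = 1 + 2x (illustrative only)
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
intercept, slope = fit_simple_linear_regression(xs, ys)
print(intercept, slope)
```

Because the toy points sit exactly on a line, the fit should recover that line. With real data the residuals will not all be zero.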

Application in Real Life

Pick any two things that you use in your daily life and that are related.

For example, I have data on my monthly spending, monthly income and the number of trips per month for the last three years. Now I need to answer the following questions:

- What will be my monthly spending for next year?

- Which factor (monthly income or number of trips per month) is more important in deciding my monthly spending?

- How are monthly income and trips per month correlated with monthly spending?

Yep, you are right. Linear Regression will come to your rescue.

Types of Linear Regression

Yes, we are complicating it for you. Be open to new stuff. :D

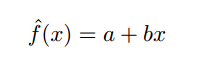

1. Simple Linear Regression

This method uses a single independent variable to predict a dependent variable by fitting a best linear relationship.

2. Multiple Linear Regression

This method uses more than one independent variable to predict a dependent variable by fitting a best linear relationship.

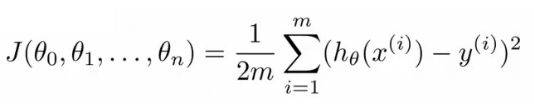

In the case of Multiple Regression, the parameters can be found in the same way as in the case of simple linear regression, by minimising the cost function using:

- Gradient Descent: Given a function defined by a set of parameters, Gradient Descent starts with an initial set of parameter values and iteratively moves towards a set of values that minimise the function. This iterative minimisation is done using calculus, taking steps in the negative direction of the function gradient.

Note: Multiple Linear Regression works best when multicollinearity is absent. Multicollinearity is a phenomenon in which two or more predictor variables are highly correlated.
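The gradient descent idea described above can be sketched in a few lines of plain Python. This is only an illustration, assuming the cost function is the mean squared error. The names `learning_rate` and `n_iters` and the toy data are my own choices, not something fixed by the method.

```python
def gradient_descent(X, y, learning_rate=0.01, n_iters=5000):
    """Fit y ~ b0 + b1*x1 + ... + bp*xp by minimising the mean squared error."""
    n = len(X)
    p = len(X[0])
    weights = [0.0] * (p + 1)  # weights[0] is the intercept
    for _ in range(n_iters):
        grad = [0.0] * (p + 1)
        for row, target in zip(X, y):
            pred = weights[0] + sum(w * x for w, x in zip(weights[1:], row))
            error = pred - target
            grad[0] += 2 * error / n
            for j, x in enumerate(row):
                grad[j + 1] += 2 * error * x / n
        # Take a step in the negative direction of the gradient
        weights = [w - learning_rate * g for w, g in zip(weights, grad)]
    return weights


# Toy data generated from y = 1 + 2*x1 + 3*x2 (made up for illustration)
X = [[0, 0], [1, 0], [0, 1], [1, 1], [2, 1], [1, 2]]
y = [1, 3, 4, 6, 8, 9]
weights = gradient_descent(X, y, learning_rate=0.05, n_iters=20000)
print(weights)
```

If the learning rate is too large the updates can diverge, and if it is too small convergence gets slow, so in practice it usually needs some tuning.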

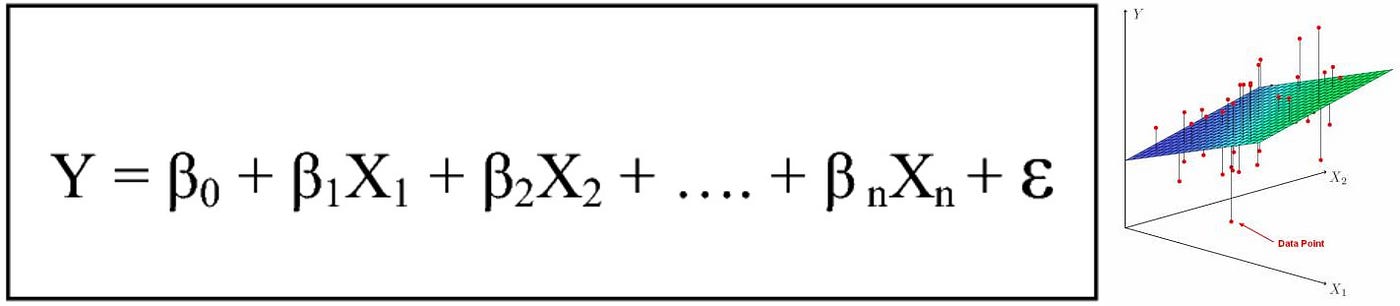

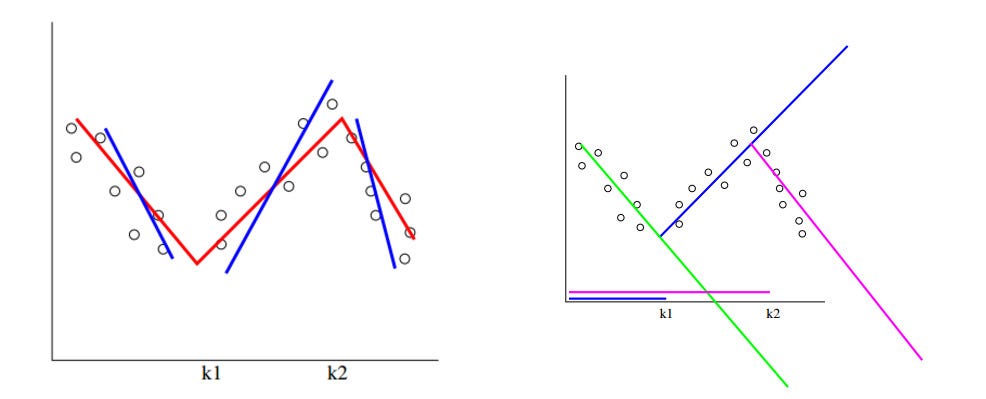

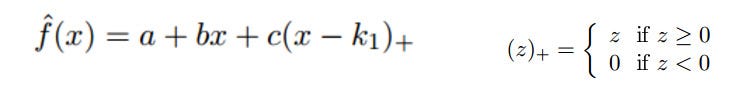

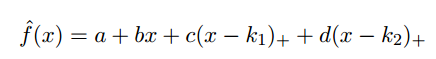

3. Linear Splines

Sometimes, linear splines are used to reduce the problem to Linear Regression. In this method, we fit the data with a piece-wise linear function. Let us suppose that the knots are at k1 and k2 in the scatter plot as shown in the figures below. You may be thinking that we can divide the data into three groups using k1 and k2 and solve three regression problems (blue lines in left figure). But as you can see, it does not assure continuity!

To make the curve continuous, we can use the fact that any linear spline can be written as a linear combination of basis functions. Thus, the objective of the linear splines is to fit the red lines in the data (as shown in left figure). So, we can build a piece-wise linear function step by step.

- We first start with a linear function for the points before k1 (green line in right figure).

- Next, we add a second function for the points between k1 and k2 (blue line in right figure).

- At last, we add a third function (purple line in right figure) for the points after k2.

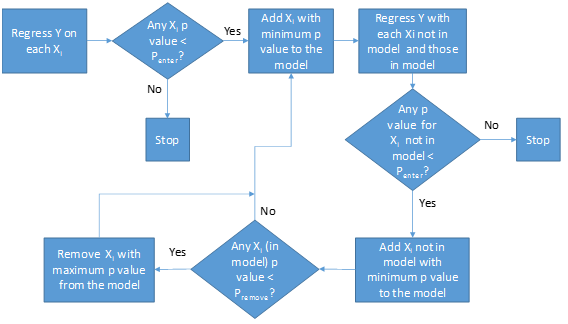
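Here is a small sketch of how such a piece-wise linear function can be built from basis functions. I am assuming the common "hinge" basis (x - k)+, which is zero before the knot and linear after it. The original figure may use a different but equivalent basis. The knots and coefficients below are hypothetical values picked only for illustration.

```python
def hinge(x, knot):
    """Basis function (x - knot)+ : zero before the knot, linear after it."""
    return max(0.0, x - knot)


def linear_spline(x, coeffs, k1, k2):
    """Evaluate b0 + b1*x + b2*(x - k1)+ + b3*(x - k2)+."""
    b0, b1, b2, b3 = coeffs
    return b0 + b1 * x + b2 * hinge(x, k1) + b3 * hinge(x, k2)


# Hypothetical knots and coefficients, chosen only for illustration
k1, k2 = 2.0, 5.0
coeffs = (1.0, 0.5, 1.5, -3.0)

# The slope is b1 before k1, b1 + b2 between the knots, b1 + b2 + b3 after k2.
# Each hinge is zero at its own knot, so the pieces join without a jump.
just_left_of_k1 = linear_spline(k1 - 1e-9, coeffs, k1, k2)
just_right_of_k1 = linear_spline(k1 + 1e-9, coeffs, k1, k2)
print(just_left_of_k1, just_right_of_k1)
```

In a real fit the four coefficients would be estimated by ordinary least squares on the columns 1, x, (x - k1)+ and (x - k2)+, which is exactly how the problem reduces to Linear Regression.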

4. Stepwise Regression

This regression model is used when we have more than one independent variable. It uses an automated procedure to select important independent variables, and there is no human intervention.

Forward Stepwise Regression

- Here, we start with the null model, which means it has no predictors, just one intercept (the mean of the dependent variable).

- Now, fit p (total number of variables) simple linear regression models, each with one of the variables in. Thus, we have searched through all the single-variable models, picked the best one and fixed it in the model.

- Similarly, search through the remaining p-1 variables one by one, but this time with the variable selected in the previous step kept in the model. Now choose the best among these p-1 models.

- Continue until some stopping rule is satisfied, such as a threshold on the number of variables to be selected.
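The forward steps above can be sketched in plain Python as follows. This is a rough illustration, not a production routine: I am assuming "best" means the lowest residual sum of squares (RSS), and the stopping rule is a maximum number of variables. All function names and the toy data are made up.

```python
from itertools import product


def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def residual_sum_of_squares(X, y, columns):
    """Fit least squares with an intercept plus the given columns; return RSS."""
    design = [[1.0] + [row[j] for j in columns] for row in X]
    k = len(design[0])
    xtx = [[sum(r[i] * r[j] for r in design) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * t for r, t in zip(design, y)) for i in range(k)]
    beta = solve_linear_system(xtx, xty)  # normal equations
    return sum((t - sum(b * v for b, v in zip(beta, r))) ** 2
               for r, t in zip(design, y))


def forward_stepwise(X, y, max_vars):
    """Greedily add the variable that lowers RSS the most, up to max_vars."""
    selected = []
    remaining = list(range(len(X[0])))
    while remaining and len(selected) < max_vars:
        best = min(remaining,
                   key=lambda j: residual_sum_of_squares(X, y, selected + [j]))
        selected.append(best)
        remaining.remove(best)
    return selected


# Toy data: y depends strongly on column 2, weakly on column 0, not on column 1
X = [list(v) for v in product([-1.0, 1.0], repeat=3)]
y = [10 + 2 * row[0] + 5 * row[2] for row in X]
selected_vars = forward_stepwise(X, y, max_vars=2)
print(selected_vars)
```

On this toy data the strongest predictor (column 2) is picked first and column 0 second. Real data will rarely be this clean, and a different criterion such as adjusted R-squared could be swapped in for RSS.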

Backward Stepwise Regression

- It starts with the full least squares model containing all p predictors.

- Now remove the variable with the largest p-value, i.e. the least significant predictor.

- The new model will have (p-1) variables. Remove the variable with the largest p-value again.

- Continue until some stopping rule is satisfied, such as all variables having a p-value smaller than a threshold value.

So you can see, Stepwise Linear Regression is applying Multiple Linear Regression multiple times and selecting the important variables or removing the least significant predictors each time.

Note 1: For Backward Stepwise Linear Regression or Multiple Linear Regression to work fine, the number of observations (n) should be more than the number of variables (p). This is because we can do least squares regression only when n is greater than p. For p greater than n, the least squares problem does not have a unique solution.

Note 2: Automatic procedures may not choose the right significant variables from a practical point of view, as they don't have the special knowledge the analyst might have.
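A rough sketch of the backward steps, again in plain Python. One simplification to flag: instead of computing p-values, this uses the absolute t-statistic of each coefficient. Within a single fitted model the largest p-value belongs to the smallest |t|, so the removal order is the same. The stopping threshold of 2.0 on |t| is my own stand-in for a p-value threshold, and the function names and toy data are made up.

```python
from itertools import product
from math import sqrt


def invert(a):
    """Invert a small square matrix by Gauss-Jordan elimination."""
    n = len(a)
    m = [row[:] + [1.0 if i == j else 0.0 for j in range(n)]
         for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        scale = m[col][col]
        m[col] = [v / scale for v in m[col]]
        for r in range(n):
            if r != col:
                factor = m[r][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [row[n:] for row in m]


def t_statistics(X, y, columns):
    """Return |t| for each chosen column of a least squares fit with intercept."""
    design = [[1.0] + [row[j] for j in columns] for row in X]
    n, k = len(design), len(design[0])
    xtx = [[sum(r[i] * r[j] for r in design) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * t for r, t in zip(design, y)) for i in range(k)]
    inv = invert(xtx)
    beta = [sum(inv[i][j] * xty[j] for j in range(k)) for i in range(k)]
    rss = sum((t - sum(b * v for b, v in zip(beta, r))) ** 2
              for r, t in zip(design, y))
    sigma2 = rss / (n - k)  # needs more observations than fitted parameters
    # Index 0 is the intercept, which is never a removal candidate here
    return {col: abs(beta[i + 1]) / sqrt(sigma2 * inv[i + 1][i + 1])
            for i, col in enumerate(columns)}


def backward_stepwise(X, y, t_threshold=2.0):
    """Drop the least significant predictor until all pass the threshold."""
    selected = list(range(len(X[0])))
    while selected:
        t_stats = t_statistics(X, y, selected)
        weakest = min(selected, key=lambda j: t_stats[j])
        if t_stats[weakest] >= t_threshold:
            break  # every remaining predictor looks significant
        selected.remove(weakest)
    return selected


# Toy data: column 1 has only a tiny effect; the last term acts as noise
X = [list(v) for v in product([-1.0, 1.0], repeat=3)]
y = [10 + 2 * a + 0.1 * b + 5 * c + 0.5 * a * b * c for a, b, c in X]
kept = backward_stepwise(X, y)
print(kept)
```

On this toy data the weak column 1 is dropped and columns 0 and 2 are kept. For a proper p-value you would compare each |t| against a t-distribution with n - k degrees of freedom, which a statistics library can do for you.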

How to select the right regression model?

There is no perfect answer to this question because choosing the right linear regression model with a small sample of data is a difficult task. Still, here are some common statistical methods to get an idea of which model best fits your data:

- Adjusted R-squared and Predicted R-squared Value :- Choose the model with the higher adjusted and predicted R-squared values. Unlike the adjusted and predicted R-squared values, which may increase or decrease on adding a predictor depending on the performance of the model, the regular R-squared value never decreases when we add a predictor and may lead to an overly complex model.

- P-values for the predictors :- Variables with low p-values are the most significant variables.

- Mallows' Cp :- It compares the precision and bias of the full model to models with a subset of predictors. The smaller it is, the more precise are the estimates of the true regression coefficients of the model.

Note: Apart from the above statistical methods, I would suggest that cross-validation is the best way to evaluate models.
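To make the first criterion concrete, here is a small sketch of R-squared and adjusted R-squared. It uses the standard formula adjusted R2 = 1 - (1 - R2) * (n - 1) / (n - p - 1), where n is the number of observations and p the number of predictors. The function names and the example numbers are only for illustration.

```python
def r_squared(y_true, y_pred):
    """Share of the variance in y_true explained by the predictions."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def adjusted_r_squared(r2, n_obs, n_predictors):
    """Penalise R-squared for the number of predictors in the model."""
    return 1.0 - (1.0 - r2) * (n_obs - 1) / (n_obs - n_predictors - 1)


# Same R-squared of 0.90 on 36 observations, but different model sizes
small_model = adjusted_r_squared(0.90, n_obs=36, n_predictors=2)
large_model = adjusted_r_squared(0.90, n_obs=36, n_predictors=10)
print(small_model, large_model)
```

With the same plain R-squared, the model with more predictors gets the lower adjusted value, which is the penalty for complexity described above.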
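As a minimal sketch of what cross-validation looks like, here is k-fold cross-validation for a simple linear regression in plain Python. The folds are taken by index (every k-th point) without shuffling, which is a simplification on my part, and the function names and data are made up for illustration.

```python
def fit_line(xs, ys):
    """Least squares fit of y = intercept + slope * x."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    slope = (sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
             / sum((x - x_mean) ** 2 for x in xs))
    return y_mean - slope * x_mean, slope


def k_fold_mse(xs, ys, k):
    """Average held-out mean squared error over k folds."""
    n = len(xs)
    fold_errors = []
    for fold in range(k):
        test_idx = [i for i in range(n) if i % k == fold]
        train_idx = [i for i in range(n) if i % k != fold]
        # Fit only on the training part of this fold
        intercept, slope = fit_line([xs[i] for i in train_idx],
                                    [ys[i] for i in train_idx])
        # Score only on the points the model has not seen
        errors = [(ys[i] - (intercept + slope * xs[i])) ** 2 for i in test_idx]
        fold_errors.append(sum(errors) / len(errors))
    return sum(fold_errors) / k


# Made-up, roughly linear data
xs = [float(i) for i in range(10)]
ys = [1.1, 2.9, 5.2, 6.8, 9.1, 11.0, 12.8, 15.3, 16.9, 19.2]
cv_error = k_fold_mse(xs, ys, k=5)
print(cv_error)
```

To compare candidate models, you would compute this held-out error for each one and prefer the model with the lower value.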

Implementation in Python

So, it is important to see how to use what we have learned. I have applied Linear Regression on a self-created dataset in which I had recorded the monthly income, monthly trips and monthly spending for the last three years.

Click Here for the full code and see how easy the world of Machine Learning is when it comes to Linear Regression.

Footnotes

Yep, this was a long one. But the most important one too! We are almost at the end of the 12A12D series. We hope you are learning.

Thanks for reading. :)

And, ❤ if this was a good read. Enjoy!

Editor: Akhil Gupta

Source: https://medium.com/data-science-group-iitr/linear-regression-back-to-basics-e4819829d78b